Scenario: 1

A multinational company is developing a sophisticated web application that requires integration with multiple third-party APIs. The company’s unique keys for each API are hardcoded inside an AWS CloudFormation template.

The security team requires that the keys be passed into the template without exposing their values in plaintext. Moreover, the keys must be encrypted at rest and in transit.

As a security engineer within the team, which measure would you implement to provide the HIGHEST level of security while meeting these requirements?

Question: 2

A startup is testing its in-house application code using an Amazon EC2 instance and recently discovered that the instance was compromised and was serving up malware. Further examination of the instance revealed that it was compromised 30 days ago.

To prevent this from happening again, the startup urgently asked the security engineer to implement a continuous monitoring solution that automatically notifies the security team about compromised instances via email for high-severity findings.

Which combination of steps should the security engineer take to implement the solution?

Amazon GuardDuty creates an event for Amazon EventBridge (formerly Amazon CloudWatch Events) whenever a finding changes. Finding changes that create an event include newly generated findings and newly aggregated findings. Events are emitted on a best-effort basis.

Every GuardDuty finding is assigned a finding ID. GuardDuty creates an event for every finding with a unique finding ID, and all subsequent occurrences of an existing finding are aggregated into the original finding.

An Amazon EventBridge rule can be used with GuardDuty to set up automated finding alerts by sending GuardDuty finding events to a messaging hub, which increases the visibility of GuardDuty findings. You can send finding alerts to email, Slack, or Amazon Chime by setting up an SNS topic and then connecting that topic to an EventBridge rule.

You can create a custom event pattern in the EventBridge rule to match specific findings (for example, a finding type or a severity level) and then route the matching events to an Amazon Simple Notification Service (Amazon SNS) topic.

– Enable the Amazon GuardDuty service in the AWS account that needs to be monitored.

– Set up an Amazon SNS topic, then subscribe the security team’s email addresses to the topic.

– Create an Amazon EventBridge rule for GuardDuty findings with high severity. Set up the rule to publish a message to the topic.
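The third step hinges on the rule's event pattern. The minimal sketch below builds such a pattern as a Python dict, assuming that "high severity" means a GuardDuty severity of 7 or above (GuardDuty's High band starts at 7.0, so a lower bound of 7 also catches anything rated higher). The function name is illustrative, not part of any AWS SDK.

```python
import json

# Assumption: "high severity" = GuardDuty severity >= 7 (start of the High band).
HIGH_SEVERITY_MIN = 7


def guardduty_high_severity_pattern(min_severity: float = HIGH_SEVERITY_MIN) -> dict:
    """Build an EventBridge event pattern that matches GuardDuty findings
    whose severity is at or above min_severity."""
    return {
        "source": ["aws.guardduty"],
        "detail-type": ["GuardDuty Finding"],
        # EventBridge numeric content filter: detail.severity >= min_severity
        "detail": {"severity": [{"numeric": [">=", min_severity]}]},
    }


if __name__ == "__main__":
    # This JSON string is what would be supplied as the rule's event pattern.
    print(json.dumps(guardduty_high_severity_pattern(), indent=2))
```

With boto3, the JSON string could be passed as EventPattern to events.put_rule, and the SNS topic attached as the rule's target with events.put_targets. The topic's access policy must also allow events.amazonaws.com to publish to it.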

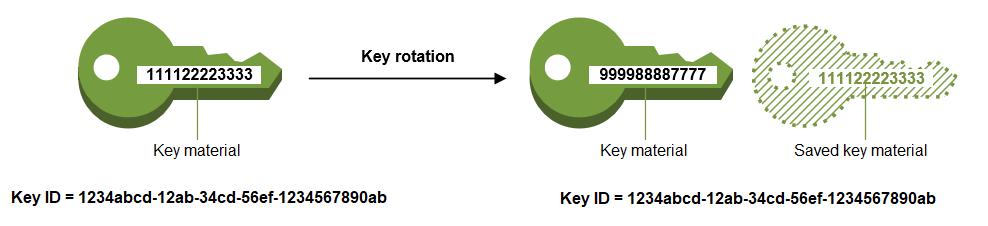

A company wants to use AWS Key Management Service (AWS KMS) to encrypt confidential documents owned by different organizations. The security team must have full control over how the KMS keys are used; however, they don’t want the operational overhead of rotating the keys manually every year. How would you, as a security engineer, go about this?

Cryptographic best practices discourage extensive reuse of encryption keys. To create new cryptographic material for your AWS Key Management Service (AWS KMS) keys, you can create a new KMS key and then change your applications or aliases to use the new KMS key. Alternatively, you can enable automatic key rotation for an existing customer managed key.

When you enable automatic key rotation for a customer managed key, AWS KMS generates new cryptographic material for the key every year by default. AWS KMS also retains the KMS key’s older cryptographic material in perpetuity so that it can be used to decrypt data that the key encrypted. AWS KMS does not delete any rotated key material until you delete the KMS key. Key rotation changes only the KMS key’s backing key, which is the cryptographic material used in encryption operations. The KMS key remains the same logical resource, regardless of whether or how many times its backing key changes.

Key rotation in AWS KMS is a cryptographic best practice that is designed to be transparent and easy to use. AWS KMS supports optional automatic key rotation only for symmetric encryption customer managed keys.

Key point: Use a symmetric customer managed key and enable automatic key rotation to accomplish the job.
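A minimal sketch of the key point, assuming a boto3-style KMS client (for example boto3.client("kms")) is passed in so the logic can be exercised without AWS credentials. The function name and description string are illustrative; the key policy that gives the security team full control is not shown.

```python
from typing import Any


def create_rotating_key(kms: Any, description: str) -> str:
    """Create a symmetric customer managed key and turn on automatic rotation.

    `kms` is assumed to behave like boto3.client("kms"). Returns the key ID.
    """
    response = kms.create_key(
        Description=description,
        KeySpec="SYMMETRIC_DEFAULT",  # automatic rotation needs a symmetric key
        KeyUsage="ENCRYPT_DECRYPT",
    )
    key_id = response["KeyMetadata"]["KeyId"]

    # AWS KMS then rotates the backing key on its own (yearly by default).
    kms.enable_key_rotation(KeyId=key_id)

    # Confirm that rotation is actually enabled before handing the key out.
    status = kms.get_key_rotation_status(KeyId=key_id)
    if not status["KeyRotationEnabled"]:
        raise RuntimeError(f"automatic rotation is not enabled for {key_id}")
    return key_id
```

Because rotation only swaps the backing key, applications keep using the same key ID or alias and never have to re-point to a new key.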

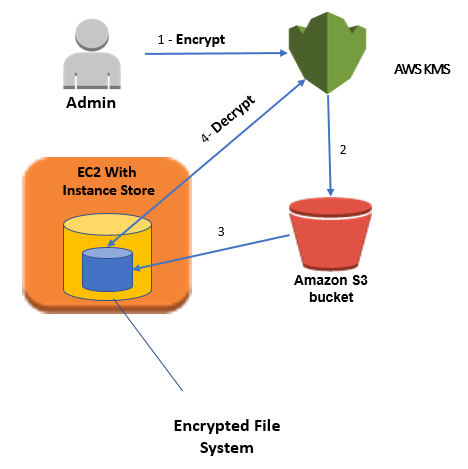

A media company runs a Python script that uses the AWS CLI command aws s3 cp to upload a large file to an Amazon S3 bucket, with an AWS KMS key specified in the upload request. An Access Denied error always occurs whenever their developers upload a file that is 10 GB or larger. However, when they upload a smaller file with the same KMS key, the upload succeeds.

How do you resolve this issue?

If you get an Access Denied error when trying to upload a large file to your S3 bucket with an upload request that includes an AWS KMS key, confirm that you have permission to perform the kms:Decrypt action on the AWS KMS key that you’re using to encrypt the object.

kms:Decrypt is only one of the actions that you must have permission to perform when you upload or download an Amazon S3 object encrypted with an AWS KMS key. You must also have permission for the kms:Encrypt, kms:ReEncrypt*, kms:GenerateDataKey*, and kms:DescribeKey actions.

The AWS CLI (aws s3 commands), AWS SDKs, and many third-party programs automatically perform a multipart upload when the file is large. To perform a multipart upload with encryption using an AWS KMS key, the requester must have permission to the kms:Decrypt action on the key. This permission is required because Amazon S3 must decrypt and read data from the encrypted file parts before it completes the multipart upload.

Key points:

1. The AWS CLI S3 commands perform a multipart upload when the file is large.

2. The IAM policy of the developer does not include the kms:Decrypt permission.
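The fix for key point 2 is an IAM statement that includes kms:Decrypt alongside the other KMS actions listed above. The sketch below builds that statement as a Python dict; the key ARN and Sid are hypothetical placeholders.

```python
import json

# Hypothetical ARN for illustration; use the ARN of the KMS key in the upload request.
KEY_ARN = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"


def s3_kms_upload_statement(key_arn: str) -> dict:
    """IAM policy statement allowing S3 uploads encrypted with key_arn,
    including the kms:Decrypt needed to complete multipart uploads."""
    return {
        "Sid": "AllowKmsForS3Uploads",  # hypothetical Sid
        "Effect": "Allow",
        "Action": [
            "kms:Decrypt",          # needed to complete multipart uploads
            "kms:Encrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey",
        ],
        "Resource": key_arn,
    }


policy = {"Version": "2012-10-17", "Statement": [s3_kms_upload_statement(KEY_ARN)]}
print(json.dumps(policy, indent=2))
```

The size dependence comes from the CLI itself: aws s3 cp switches to a multipart upload once a file exceeds its multipart_threshold setting (8 MB by default). That is why small files succeed without kms:Decrypt and large ones fail.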

Reference:

https://aws.amazon.com/premiumsupport/knowledge-center/s3-large-file-encryption-kms-key

https://docs.aws.amazon.com/cli/latest/userguide/cli-services-s3-commands.html#using-s3-commands-managing-objects

A Security Administrator is troubleshooting an issue in which an IAM user with full EC2 permissions could not start an EC2 instance after it was stopped for server maintenance. The instance state changes to Pending when the user tries to start the instance; however, it goes back to the Stopped state after a few seconds. According to the initial investigation, EBS volumes encrypted with a KMS key are attached to the instance. The Administrator noticed that the IAM user was able to start the EC2 instance when the encrypted volumes were detached. The Administrator also confirmed that the KMS key in the user policy is correct.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "IAMUserPolicyBatanes",
      "Effect": "Allow",
      "Action": [
        "ec2:*"
      ],
      "Resource": "arn:aws:kms:us-east-2:01234567890:key/batanes-key"
    }
  ]
}
```

What are the key things that the Administrator is missing in the IAM user policy that can solve this issue? (Select TWO.)

Overview.

AWS KMS supports two resource-based access control mechanisms: key policies and grants. With grants, you can programmatically delegate the use of KMS keys to other AWS principals. You can use them to allow access, but not to deny it. Because grants can be very specific and are easy to create and revoke, they are often used to provide temporary or more granular permissions.

You can also use key policies to allow other principals to access a KMS key, but key policies work best for relatively static permission assignments. Also, key policies use the standard permissions model for AWS policies, in which users either have or do not have permission to perform an action with a resource. For example, users with the kms:PutKeyPolicy permission for a KMS key can completely replace the key policy for that KMS key with a different key policy of their choice. To enable more granular permissions management, use grants.

You can specify conditions in key policies and AWS Identity and Access Management policies (IAM policies) that control access to AWS KMS resources. The policy statement is effective only when the conditions are true. For example, you might want a policy statement to take effect only after a specific date, or to control access only when a specific value appears in an API request.

In this scenario, the encrypted EBS volumes must be decrypted when the instance starts. To do this, Amazon EBS creates a grant on the KMS key on the user's behalf, so the user needs the kms:CreateGrant permission. The kms:GrantIsForAWSResource condition key limits that permission to grants that an AWS service integrated with AWS KMS creates on the user's behalf.

Key Points:

1. Add kms:CreateGrant in the Action element.

2. Add "Condition": { "Bool": { "kms:GrantIsForAWSResource": true } }.
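The two key points can be sketched as a corrected user policy, built here as a Python dict. The key ARN is copied as-is from the question. As an assumption, the KMS permission is placed in its own statement so that the Bool condition applies only to kms:CreateGrant and not to the ec2:* actions, and the EC2 statement is scoped to "*". The Sid "FullEc2Access" is hypothetical.

```python
import json

# Key ARN copied as-is from the question's policy.
KEY_ARN = "arn:aws:kms:us-east-2:01234567890:key/batanes-key"


def corrected_user_policy(key_arn: str) -> dict:
    """Sketch of the user policy with kms:CreateGrant added, restricted to
    grants that AWS services (here Amazon EBS) create for AWS resources."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Assumption: full EC2 access applies to all EC2 resources.
                "Sid": "FullEc2Access",
                "Effect": "Allow",
                "Action": ["ec2:*"],
                "Resource": "*",
            },
            {
                "Sid": "IAMUserPolicyBatanes",
                "Effect": "Allow",
                "Action": ["kms:CreateGrant"],
                "Resource": key_arn,
                # Only grants created by an integrated AWS service are allowed.
                "Condition": {"Bool": {"kms:GrantIsForAWSResource": True}},
            },
        ],
    }


print(json.dumps(corrected_user_policy(KEY_ARN), indent=2))
```

json.dumps renders the Python True as the JSON literal true, which matches the condition shown in the key points.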

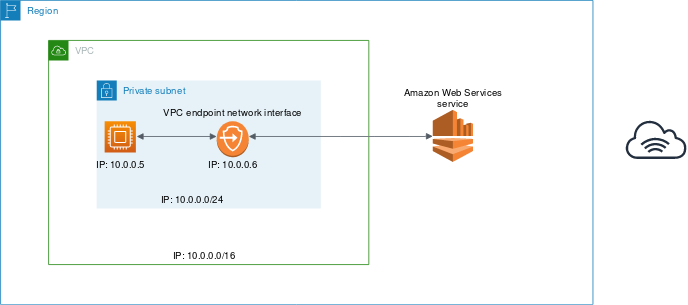

A company has an application that heavily uses AWS KMS to encrypt financial data. A Security Engineer has been instructed to ensure that communications between the company’s VPC and AWS KMS do not pass through the public internet.

Which combination of steps is a suitable solution in this scenario?

You can connect directly to AWS KMS through a private endpoint in your VPC instead of connecting over the internet. When you use a VPC endpoint, communication between your VPC and AWS KMS is conducted entirely within the AWS network. AWS KMS supports Amazon Virtual Private Cloud (Amazon VPC) interface endpoints that are powered by AWS PrivateLink.

Each VPC endpoint is represented by one or more elastic network interfaces (ENIs) with private IP addresses in your VPC subnets. The VPC interface endpoint connects your VPC directly to AWS KMS without an internet gateway, NAT device, VPN connection, or AWS Direct Connect connection. The instances in your VPC do not need public IP addresses to communicate with AWS KMS.

If you use the default domain name servers (AmazonProvidedDNS) and enable private DNS hostnames for your VPC endpoint, you do not need to specify the endpoint URL. AWS populates your VPC name server with private zone data, so the public KMS endpoint (https://kms.(region).amazonaws.com) resolves to your private VPC endpoint. To enable this feature when using your own name servers, forward requests for the KMS domain to the VPC name server.

You can also use AWS CloudTrail logs to audit your use of KMS keys through the VPC endpoint, and you can use conditions in IAM and key policies to deny access to any request that does not come from a specified VPC or VPC endpoint.

Key Points:

1. Modify the AWS KMS key policy to include the aws:sourceVpce condition and reference the VPC endpoint ID.

2. Set up a new VPC endpoint for AWS KMS with private DNS enabled.
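Key point 1 can be sketched as a key policy statement that denies cryptographic use of the key unless the request arrives through the KMS interface endpoint. The endpoint ID and Sid below are hypothetical, and the action list is an assumption. A Deny statement grants nothing by itself, so it would be appended to the key policy's existing Allow statements.

```python
import json

# Hypothetical VPC endpoint ID; use the ID of the KMS interface endpoint you created.
VPCE_ID = "vpce-0123456789abcdef0"


def deny_outside_vpce_statement(vpce_id: str) -> dict:
    """Key policy statement that denies cryptographic operations with this key
    unless the request comes through the given VPC endpoint."""
    return {
        "Sid": "DenyUseOutsideVpcEndpoint",  # hypothetical Sid
        "Effect": "Deny",
        "Principal": "*",
        "Action": ["kms:Encrypt", "kms:Decrypt", "kms:GenerateDataKey*"],
        "Resource": "*",  # inside a key policy, "*" refers to this KMS key
        "Condition": {"StringNotEquals": {"aws:sourceVpce": vpce_id}},
    }


print(json.dumps(deny_outside_vpce_statement(VPCE_ID), indent=2))
```

Be careful when rolling this out: AWS services that call AWS KMS on your behalf (for example, Amazon S3 with SSE-KMS) do not send their requests through your VPC endpoint, so a broad Deny like this can also block them.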

Reference:

https://docs.aws.amazon.com/kms/latest/developerguide

https://aws.amazon.com/kms/features/

https://aws.amazon.com/kms/faqs/

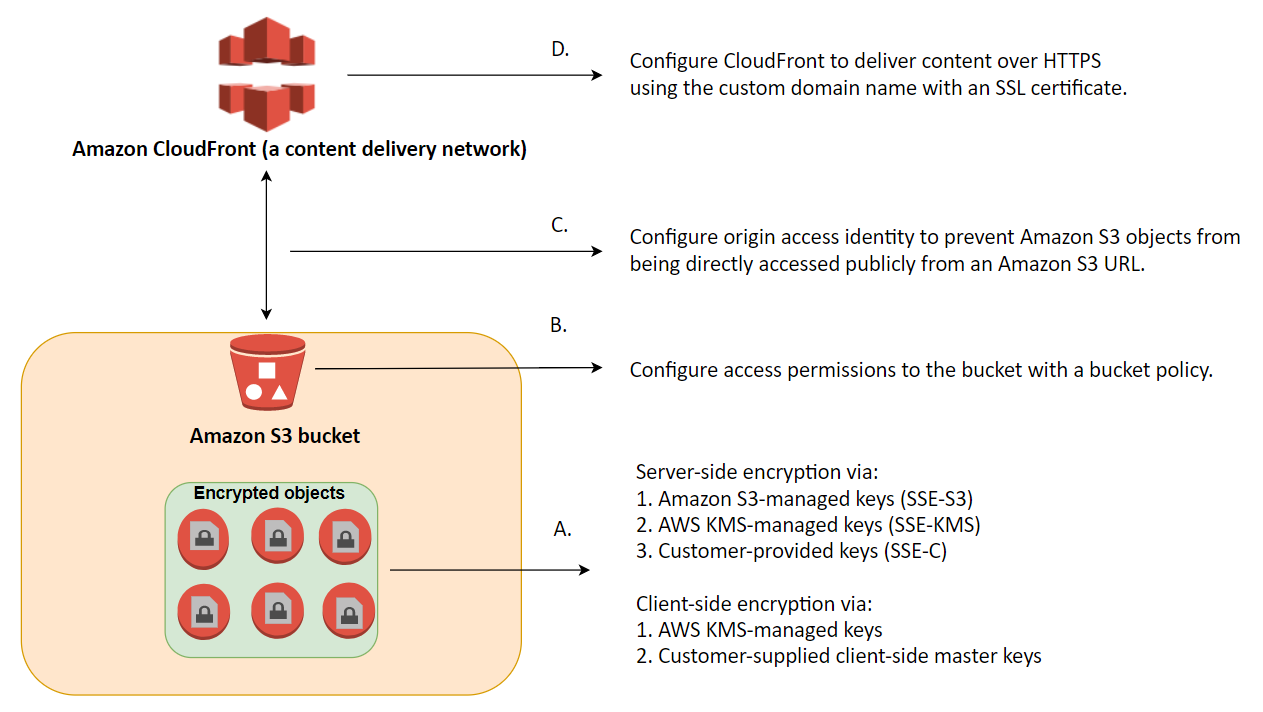

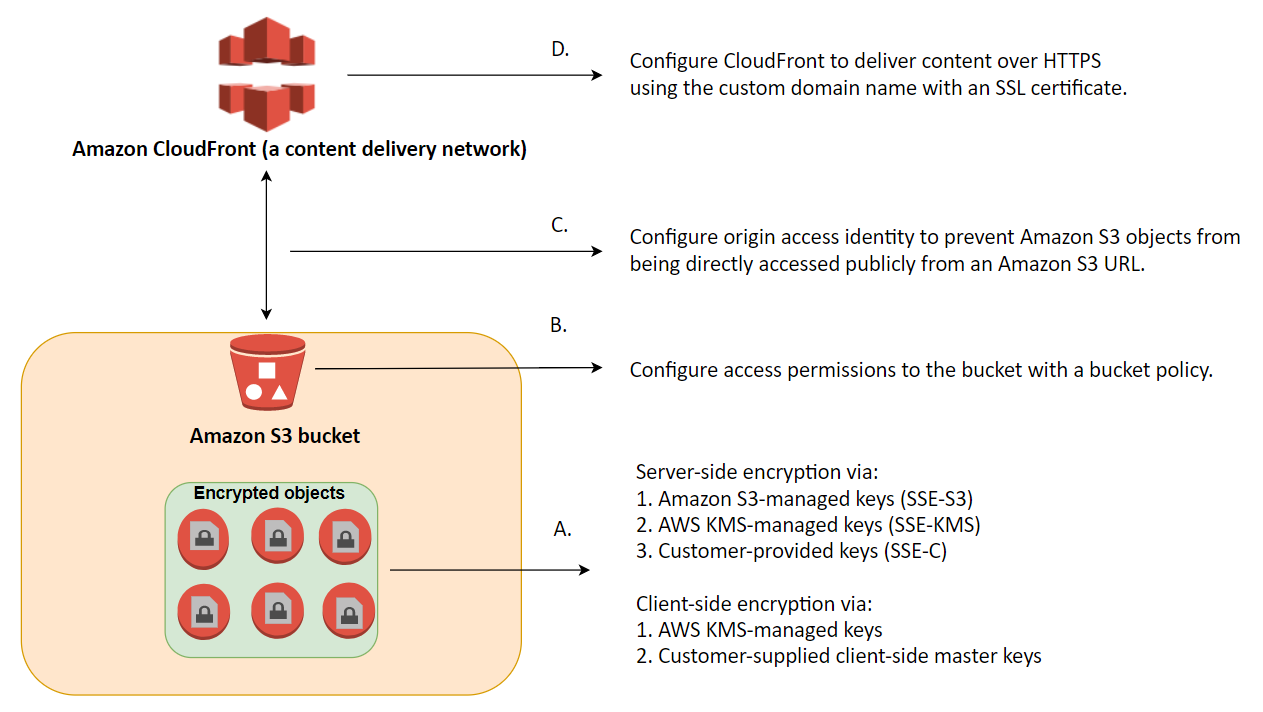

An e-commerce website is hosted in an Auto Scaling group of EC2 instances and stores static data in an S3 bucket. The Security Administrator must ensure that the data is encrypted at rest using an encryption key that is both provided and managed by the company. To comply with the IT security policy, the solution must use AES-256 encryption to protect the data that the website stores.

Data protection refers to protecting data while in transit (as it travels to and from Amazon S3) and at rest (while it is stored on disks in Amazon S3 data centers). You can protect data in transit by using SSL/TLS or by using client-side encryption.

You have the following options for protecting data at rest in Amazon S3:

Use Server-Side Encryption – You request Amazon S3 to encrypt your object before saving it on disks in its data centers and to decrypt it when you download the object.

Use Client-Side Encryption – You encrypt data on the client side and upload the encrypted data to Amazon S3. In this case, you manage the encryption process, the encryption keys, and related tools.

1. Use client-side encryption with AWS KMS

2. Use client-side encryption with a client-side master key

Key Points:

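1. Specify server-side encryption with customer-provided keys (SSE-C) during object creation using the REST API. With SSE-C, the company supplies its own 256-bit key with each request, Amazon S3 uses that key to perform AES-256 encryption, and Amazon S3 does not store the key.

2. Encrypt the data on the client side using the company's own master key before sending it to Amazon S3.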
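For key point 1, an SSE-C REST request carries the customer key in three request headers. The minimal sketch below computes those headers from a 256-bit key using only the standard library. The function name is illustrative, and the random demo key stands in for a key the company would generate and manage itself.

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict:
    """Build the SSE-C request headers for a 256-bit customer-provided key."""
    if len(key) != 32:
        raise ValueError("SSE-C requires a 256-bit (32-byte) key")
    return {
        # Only AES256 is accepted as the SSE-C algorithm.
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        # The raw key, base64-encoded.
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode(),
        # Base64-encoded MD5 digest of the raw key, used as an integrity check.
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode(),
    }


if __name__ == "__main__":
    # Demo only: the company would supply its own managed key here.
    demo_key = os.urandom(32)
    for name, value in sse_c_headers(demo_key).items():
        print(f"{name}: {value}")
```

The same three headers must be sent again to read the object, because Amazon S3 keeps no copy of the key. The AWS SDKs expose this as request parameters (for example, SSECustomerAlgorithm and SSECustomerKey in boto3's put_object), so hand-built headers are mainly needed when calling the REST API directly.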

Question: 3

A Security Engineer found that API logging was disabled in the corporate AWS production account. The Engineer also noticed that the root user was used to create new API keys without approval.

As a Security Engineer within the corporation, what should you do to detect and automatically remediate these types of security incidents?

Question: 4

A Security Administrator is tasked with setting up an automated system to manage the access keys in the company’s AWS account. A solution must be implemented to automatically disable all IAM user access keys that are more than 90 days old.

How do you implement this?

Question: 5

A Security Engineer refactored an application to remove the hardcoded Amazon RDS database credential from the application and store it in AWS Secrets Manager instead. The application works fine after the code change. For improved data security, the Engineer enabled rotation of the credential in Secrets Manager and set it to rotate every 30 days. The change was made successfully without any issues, but after a short while, the application gets an authentication error whenever it connects to the database.

What is the MOST likely cause of this issue?

Scenario: 1

A website is hosted in an Auto Scaling group of EC2 instances behind an Application Load Balancer in the US West (N. California) Region. There is a new requirement to place a CloudFront distribution in front of the load balancer to improve the site's latency and lower the load on the origin servers. The Security Engineer must implement HTTPS communication from the client to CloudFront and then from CloudFront to the load balancer. A custom domain name must be used for the distribution, and the SSL/TLS certificate should be generated from AWS Certificate Manager (ACM).

How many certificates should the Engineer generate in this scenario?

Scenario: 2

Welcome to Home Depot! You have just joined the team, and your first task is to enhance security for the company website. The site runs on Linux, PHP, and Apache and uses an EC2 Auto Scaling group behind an Application Load Balancer (ALB). After an initial architecture assessment, you have found multiple vulnerabilities and configuration issues. The dev team is swamped and will not be able to remediate code-level issues for several weeks. Your mission in this workshop round is to build an effective set of controls that mitigate common attack vectors against web applications and provide you with the monitoring capabilities needed to react to emerging threats when they occur.

An organization is implementing a security policy in which its cloud-based users must be contained in a separate authentication domain and prevented from accessing on-premises systems. Its IT Operations team is launching and maintaining a number of Amazon RDS for SQL Server databases and EC2 instances. The organization also has an on-premises Active Directory service that contains the administrator accounts that must have access to the databases and EC2 instances.

How would the Security Engineer manage the AWS resources of the organization in the MOST secure manner?

Question: 2

We found out that anyone on the Internet can bypass the CloudFront distribution that we configured for security and open the app directly, skipping the protection provided by the components at the edge. This means the Application Load Balancer can be an easier target for an attack and a weak spot. Help us fix that!

Question: 3

A company is using AWS CloudTrail to log all AWS API activity for all AWS Regions in all of its accounts. The company wants to protect the integrity of the log files in a central location.

Which combination of steps will protect the log files from alteration?

To log all AWS API activity to a central location, we give all the AWS accounts the ability to push their logs to a central S3 bucket. Thus:

1. Create a central Amazon S3 bucket in a dedicated log account. In the member accounts, grant CloudTrail access to write logs to this bucket.

After creating a central S3 bucket in a dedicated log account, we need to be able to determine whether a log file was modified, deleted, or unchanged after CloudTrail delivered it. For this, we can use CloudTrail log file integrity validation, which ensures the integrity of the log files by reporting whether a log file has been deleted or changed. When we set up an organization trail within AWS Organizations, we can enable log file integrity validation. The trail logs activity for all organization accounts in the same S3 bucket. Member accounts cannot delete or modify the organization trail; only the management account can delete or modify the trail for the organization. Thus:

2. Enable AWS Organizations for all AWS accounts. Enable CloudTrail with log file integrity validation while creating an organization trail. Direct logs from this trail to a central Amazon S3 bucket.
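Step 2 can be sketched with a boto3-style CloudTrail client passed in, so the call shape can be checked without an AWS account. The trail and bucket names are hypothetical, and the central bucket's policy must already allow CloudTrail to write to it. The call is assumed to run from the Organizations management account.

```python
from typing import Any


def create_org_trail(cloudtrail: Any, name: str, bucket: str) -> dict:
    """Create a multi-Region organization trail with log file integrity
    validation that delivers to a central S3 bucket, then start logging.

    `cloudtrail` is assumed to behave like boto3.client("cloudtrail"),
    created with credentials from the management account.
    """
    trail = cloudtrail.create_trail(
        Name=name,
        S3BucketName=bucket,           # central bucket in the log account
        IsMultiRegionTrail=True,       # capture API activity in all Regions
        IsOrganizationTrail=True,      # member accounts cannot modify or delete it
        EnableLogFileValidation=True,  # deliver signed digest files
    )
    # A new trail does not record events until logging is started.
    cloudtrail.start_logging(Name=trail["TrailARN"])
    return trail
```

Once the digest files are being delivered, the integrity of the logs can later be checked with aws cloudtrail validate-logs, passing the trail ARN and a start time.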

Question: 4

A security engineer must ensure that all infrastructure launched in the company's AWS account is monitored for deviations from the compliance rules. All Amazon EC2 instances must be launched from one of a specified list of Amazon Machine Images (AMIs), and all attached Amazon Elastic Block Store (Amazon EBS) volumes must be encrypted. Instances that are not in compliance must be terminated.

Which combination of steps should the security engineer implement to meet these requirements?

AWS Config is used to monitor the configuration of AWS resources in your AWS account. It helps you assess compliance with internal policies and best practices and audit and troubleshoot configuration changes.

Thus,

1. Monitor compliance with AWS Config rules.

You can set up remediation actions through the AWS Config console or API. Choose the remediation action you want to associate from a pre-populated list, or create your own custom remediation actions by using AWS Systems Manager Automation runbooks.

2. Create a custom remediation action by using AWS Systems Manager Automation runbooks.
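The two steps can be sketched as the request payloads for AWS Config, built as Python dicts. The sketch assumes the AWS managed rules APPROVED_AMIS_BY_ID (with its amiIds parameter) and ENCRYPTED_VOLUMES. The AMI IDs, rule names, runbook name, and role ARN are hypothetical. The remediation points at a custom Automation runbook, and that runbook is assumed to exist and accept InstanceId.

```python
import json

# Hypothetical values for illustration only.
APPROVED_AMIS = ["ami-0123456789abcdef0", "ami-0fedcba9876543210"]
REMEDIATION_DOC = "Custom-TerminateNonCompliantInstance"  # hypothetical runbook
REMEDIATION_ROLE = "arn:aws:iam::111122223333:role/ConfigRemediationRole"  # hypothetical


def config_rules(approved_amis: list) -> list:
    """AWS managed Config rules covering the two compliance requirements."""
    return [
        {
            "ConfigRuleName": "approved-amis-by-id",
            "Source": {"Owner": "AWS", "SourceIdentifier": "APPROVED_AMIS_BY_ID"},
            # The managed rule takes the AMI IDs as one comma-separated string.
            "InputParameters": json.dumps({"amiIds": ",".join(approved_amis)}),
            "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Instance"]},
        },
        {
            "ConfigRuleName": "encrypted-volumes",
            "Source": {"Owner": "AWS", "SourceIdentifier": "ENCRYPTED_VOLUMES"},
            "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Volume"]},
        },
    ]


def terminate_remediation(rule_name: str) -> dict:
    """Automatic remediation that runs the custom runbook and passes the
    non-compliant resource ID in as the runbook's InstanceId parameter."""
    return {
        "ConfigRuleName": rule_name,
        "TargetType": "SSM_DOCUMENT",
        "TargetId": REMEDIATION_DOC,
        "Automatic": True,
        "MaximumAutomaticAttempts": 3,
        "RetryAttemptSeconds": 60,
        "Parameters": {
            "InstanceId": {"ResourceValue": {"Value": "RESOURCE_ID"}},
            "AutomationAssumeRole": {"StaticValue": {"Values": [REMEDIATION_ROLE]}},
        },
    }
```

With boto3, each rule would go to config.put_config_rule(ConfigRule=...) and the remediations to config.put_remediation_configurations(RemediationConfigurations=[...]). For the encrypted-volumes rule, the non-compliant resource is a volume rather than an instance. A custom runbook would therefore have to look up the attached instance before terminating it, which is one reason the answer calls for a custom remediation.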

Question: 5

A company wants to make an Amazon S3 bucket available to a vendor so that the vendor can analyze the log files in the bucket. The company has created an IAM role that grants access to the S3 bucket. The company also has to set up a trust policy that specifies the vendor account.

Which pieces of information are required as arguments in the API calls to access the S3 bucket?

The ARN of the IAM role you want to assume must be specified as an argument in the API call. The IAM role is created in the company's account, and a trust policy is attached to the IAM role so that the vendor can assume it.

1. The Amazon Resource Name (ARN) of the IAM role in the company's account

When the role's trust policy requires an external ID (through the sts:ExternalId condition key), the vendor must specify that external ID in its AssumeRole API calls. The external ID allows the user who is assuming the role to assert the circumstances in which they are operating. It also provides a way for the account owner to permit the role to be assumed only under specific circumstances.

2. An external ID originally provided by the vendor to the company
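Both pieces of information can be seen in a short sketch that builds the role's trust policy and makes the vendor-side AssumeRole call through a boto3-style STS client passed in. The account IDs, role ARN, external ID, and session name are all hypothetical.

```python
from typing import Any

# Hypothetical identifiers for illustration only.
VENDOR_ACCOUNT_ID = "444455556666"
EXTERNAL_ID = "vendor-supplied-external-id"
ROLE_ARN = "arn:aws:iam::111122223333:role/VendorLogReader"


def vendor_trust_policy(vendor_account_id: str, external_id: str) -> dict:
    """Trust policy on the company's role: the vendor account may assume it
    only when it presents the agreed external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{vendor_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def assume_vendor_role(sts: Any, role_arn: str, external_id: str) -> dict:
    """Run from the vendor's account; `sts` is assumed to behave like
    boto3.client("sts"). Returns temporary credentials for the S3 calls."""
    response = sts.assume_role(
        RoleArn=role_arn,                       # piece 1: the role ARN
        RoleSessionName="vendor-log-analysis",  # caller-chosen label
        ExternalId=external_id,                 # piece 2: the external ID
    )
    return response["Credentials"]
```

RoleSessionName is also a required AssumeRole parameter, but it is an arbitrary label chosen by the caller rather than information that the company has to supply.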

The LORD appeared to us in the past, saying: “I have loved you with an everlasting love; I have drawn you with unfailing kindness.” (Jeremiah 31:3, NIV)

If you want to stay at the top of your career, you have to keep on learning. No one was created to depend on others, and no one was created to be a beggar. We were all created in the image of God and empowered by God to do greater things. We are all equipped and blessed with potential, talents, and gifts. Join us to make a difference in our world.
